An unlikely candidate for the category of Action Oriented Disruptors is one of my favorites, Alan Turing.

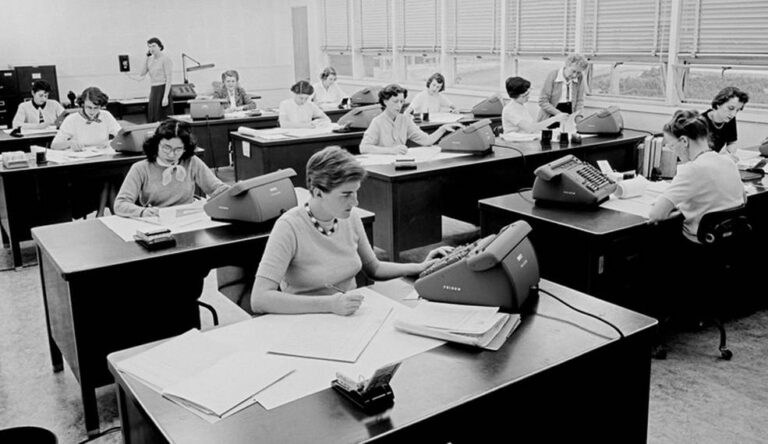

According to the Oxford English Dictionary, the first known use of the word “computer” was in a 1613 book, The Yong Mans Gleanings, by Richard Brathwait. In this context, the term referred to a human computer, a person who carried out calculations or computations.

The verb “compute” dates from the 1630s. It comes from the French word compter, which is derived from the Latin word computare. The French word for “computer”, however, is ordinateur.

By the 1640s, the noun “computer” meant one whose occupation is to make arithmetical calculations.

It was very common for companies and government departments to advertise jobs with the title ‘computer’, right up to the 1970s, by which time the word was also being used for electronic devices.

Before it adopted the IBM 701, the 704 (for the Vanguard project), and later, in 1961, the IBM 7090, NASA relied on ‘human’ computers: a talented team of women whose job was to calculate anything from how many rockets were needed to make a plane airborne to what kind of rocket propellants were needed to propel a spacecraft.

These calculations were mostly done by hand, with pencil and graph paper, often taking more than a week to complete and filling six to eight notebooks with data and formulas. Some of these women later went on to become the earliest computer programmers.

A talented team of women, who were around since JPL’s beginnings in 1936 and who were known as computers, were responsible for the number-crunching of launch windows, trajectories, fuel consumption, and other details that helped make the U.S. space program a success. [Not shown was Janez Lawson, the first African American hired by JPL in 1953 as a computer, then later as a chemical engineer.] Credits: NASA/JPL-Caltech

In 1962 Arthur C. Clarke, who wrote the novel – and co-wrote the screenplay for the movie – “2001: A Space Odyssey”, visited Bell Labs before putting the finishing touches on the work. There, he was treated to a performance of the song ‘Daisy Bell’ (or, ‘A Bicycle Built for Two’) by the IBM 704 computer. This inspired Clarke to have HAL sing the song as an homage to the programmers of the 704 at Bell Labs.

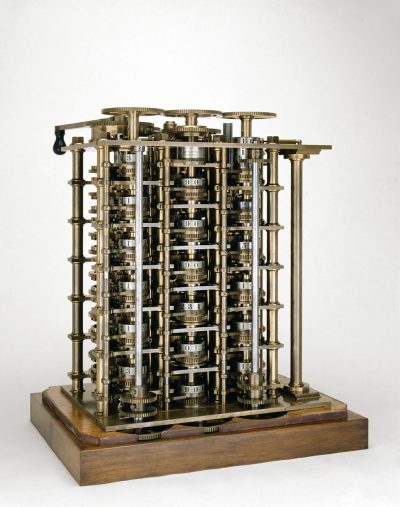

What’s the Difference Engine?

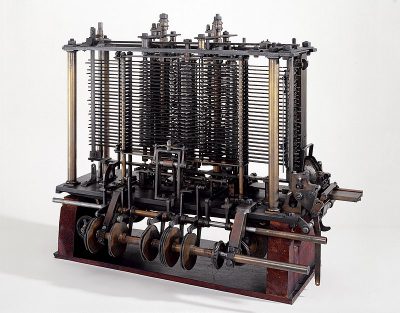

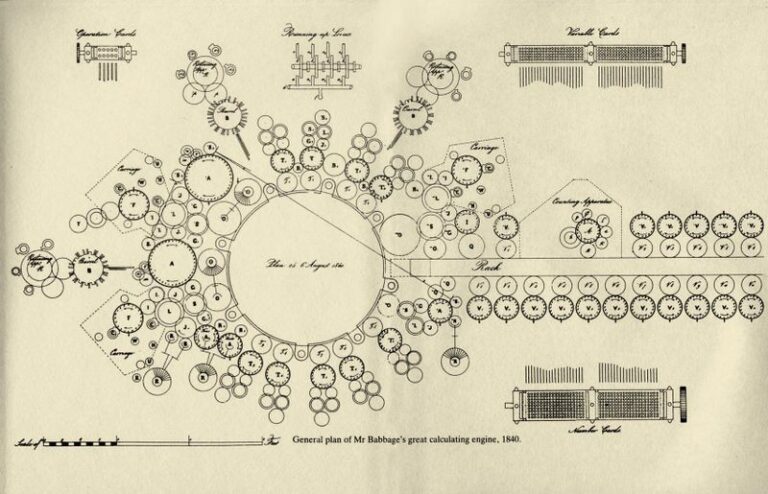

Charles Babbage is often regarded as the father of computers. He is best known for inventing the Difference Engine (the earliest version of a calculating machine, 1821). Later came the Analytical Engine, which, thanks to Ada Lovelace’s futuristic interpretation of what the machine could ultimately become, led to the idea of what would later become the modern-day computer.

The Analytical Engine’s design consisted of an arithmetic logic unit (ALU), an integrated memory, and basic flow control involving branching and loops, which in today’s terms might be likened to a very impressive programmable calculator. It was the successor to the Difference Engine, also created by Babbage. The Difference Engine came into existence in the 1820s; it could not multiply directly, but it could tabulate polynomial functions using repeated addition alone, which was still impressive for the time. The Analytical Engine was first described later, in 1837.
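How a machine that can only add manages to tabulate a polynomial is easier to see in code. The sketch below uses the method of finite differences, the technique the Difference Engine mechanized. The function names and the sample polynomial are illustrative assumptions, not a description of Babbage’s actual mechanism.

```python
def initial_differences(values):
    """Build the starting column [f(0), first difference, second difference, ...]."""
    column = []
    row = list(values)
    while row:
        column.append(row[0])
        # Each new row holds the differences between neighbours of the previous row.
        row = [b - a for a, b in zip(row, row[1:])]
    return column


def tabulate(column, count):
    """Produce `count` successive polynomial values using addition only."""
    state = list(column)
    results = []
    for _ in range(count):
        results.append(state[0])
        # Each register absorbs the value of the register below it.
        for i in range(len(state) - 1):
            state[i] += state[i + 1]
    return results


# Illustrative polynomial (an assumption for this example): f(x) = x^2 + x + 41
seed = [x * x + x + 41 for x in range(3)]  # three values pin down a degree-2 polynomial
column = initial_differences(seed)
table = tabulate(column, 6)
print(table)
```

After the three seed values are worked out once, every further entry in the table comes from additions alone, with no multiplication anywhere in `tabulate`.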

Babbage’s idea came from observing and dissecting the workings of the Jacquard Loom (1804), an impressive, almost completely automated device for weaving complex and intricate patterns into rugs, carpets, and tapestries.

The idea for this automated loom was based upon earlier inventions by the Frenchmen Basile Bouchon (1725), Jean Baptiste Falcon (1728), and Jacques Vaucanson (1740).

The Jacquard Loom worked through a series of punch cards laced together in sequence, an elegant way to create complex patterns.

Babbage had an impressive mind, and he has been described as “pre-eminent” among the many polymaths of his century. Ultimately, his device was a massive calculator designed to handle large equations at lightning speed (for the time).

Although none was built during Babbage’s lifetime, his Analytical Engine incorporated an arithmetic logic unit, control flow in the form of conditional branching and loops, and integrated memory. That was revolutionary for its time and made it the first design for a general-purpose computer. There was even a kind of central processing unit (CPU).

It was an ingenious device, but the British Association for the Advancement of Science could not see its value, given the enormously high cost of building such a machine. The machine was to be hand-cranked initially in order to work through its computations. The Analytical Engine was never completed. Only much later was a working engine built from Babbage’s designs (Difference Engine No. 2, completed by London’s Science Museum in 1991), for historic and scientific purposes only.

Modern Computing

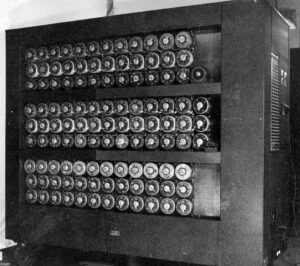

Turing, on the other hand, is considered the father of the modern computer. A mathematician and scientist, Alan Turing turned his attention to ciphers during World War II, working on breaking Germany’s Enigma machine. At Bletchley Park he developed the Bombe, first operational in 1940, which would be the device used to break the supposedly undecipherable Enigma code. (Only in the movie ‘The Imitation Game’ is the machine called Christopher, after Turing’s first friend and boyhood crush, Christopher Morcom.)

This ‘computer’ was an electro-mechanical device designed to help decipher Germany’s coded messages, thereby giving the British an advantage during World War II. Gordon Welchman, a mathematician, collaborated with Turing to design the machine.

It is estimated that cracking intercepted codes helped to end the war years earlier than would have otherwise been the case, saving millions of lives in the process.

Turing and his colleagues made educated guesses at certain words the coded Enigma messages would contain. For example, they knew that every day the Germans sent out a weather report, so the German word for ‘weather’ could be expected to appear in it. They also figured out that most messages would contain the phrase ‘Heil Hitler’. These types of patterns helped them to calculate the daily settings on the Enigma machines.

Another breakthrough soon came with the discovery that numbers were spelled out as whole words rather than written as digits. This prompted Turing to go back and review previous messages that had been decrypted, where he learned that the German word for one, ‘eins’, appeared in almost every message. From this, he created the Eins Catalogue, which helped him to automate the crib process.

A crib (the name comes from a slang term for ‘cheat’) is, in cryptology, a stretch of known or guessed plaintext that is matched against the coded text to help break a code. The crib acts as a sort of ‘clue’ as to what the coded message might be trying to say.
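To make the crib idea concrete, here is a minimal sketch of one way a guessed word can be lined up against a coded message. It relies on a well-documented Enigma property that the text above does not mention: the machine never enciphered a letter as itself. The function name and the ciphertext are made up for illustration and are not taken from a real intercept or from Turing’s actual procedure.

```python
def possible_crib_positions(ciphertext, crib):
    """Return the offsets at which the crib could line up with the ciphertext.

    Assumes the Enigma property that no letter is ever enciphered as itself,
    so any alignment where a crib letter meets the same ciphertext letter
    can be ruled out immediately.
    """
    positions = []
    for start in range(len(ciphertext) - len(crib) + 1):
        window = ciphertext[start:start + len(crib)]
        if all(c != p for c, p in zip(window, crib)):
            positions.append(start)
    return positions


# Made-up ciphertext, for illustration only.
ciphertext = "QWEITNSXEINA"
crib = "EINS"
print(possible_crib_positions(ciphertext, crib))
```

Each surviving offset is only a candidate: it narrows down where the guessed word might sit, and the remaining work of testing machine settings against that alignment is what a device like the Bombe was built to speed up.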

(It should be noted that Steve Jobs did not name his company Apple as an homage to Alan Turing, who is widely believed to have taken his own life by eating an apple laced with cyanide.)

Action Hero

Action isn’t just related to a physical act. No cape. No superpowers. Action is the forward movement someone takes in order to change something – hopefully for the better. Action is often the next step in the creation process.

Generations of research grew out of what scientists called “Turing machines,” the abstract model behind what we now refer to as computers.

“This is only a foretaste of what is to come, and only the shadow of what is going to be. We have to have some experience with the machine before we really know its capabilities . . . I do not see why it should not enter any one of the fields normally covered by the human intellect, and eventually, compete on equal terms.” ~ Alan Turing

(Quoted in The Times, 11 June 1949: ‘The Mechanical Brain’)

The ‘conceptual’ stored-program digital computer

John von Neumann repeatedly emphasized the fundamental importance of ‘On Computable Numbers’ in lectures and in correspondence. In 1946 he wrote to the mathematician Norbert Wiener of ‘the great positive contribution of Turing’, namely Turing’s mathematical demonstration that ‘one, definite mechanism can be “universal”’. In 1948, in a lecture entitled ‘The General and Logical Theory of Automata’, von Neumann said:

“The English logician, Turing, about twelve years ago, attacked the following problem. He wanted to give a general definition of what is meant by a computing automaton. Turing carried out a careful analysis of what mathematical processes can be effected by automata of this type. He also introduced and analyzed the concept of a ‘universal automaton’. An automaton is ‘universal’ if any sequence that can be produced by any automaton at all can also be solved by this particular automaton. It will, of course, require in general a different instruction for this purpose.”

The main result of the Turing theory: we might expect a priori that this is impossible. How can there be an automaton which is at least as effective as any conceivable automaton, including, for example, one of twice its size and complexity? Turing, nevertheless, proved that this was indeed possible.

The following year, in a lecture entitled ‘Rigorous Theories of Control and Information,’ von Neumann said:

“The importance of Turing’s research is just this: that if you construct an automaton right, then any additional requirements about the automaton can be handled by sufficiently elaborate instructions. This is only true if [the automaton] is sufficiently complicated, if it has reached a certain minimal level of complexity.”

In other words, there is a very definite finite point where an automaton of this complexity can, when given suitable instructions, do anything that can be done by automata at all.
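The idea that one fixed mechanism can do different jobs purely by being handed different instructions can be sketched in a few lines. The simulator below is an illustrative assumption, not Turing’s or von Neumann’s own construction, and it is not a full universal Turing machine: it only shows a single unchanging routine (`run`) behaving like two different machines depending on the instruction table it is given. All names here are hypothetical.

```python
def run(program, tape, state="start", blank="_", max_steps=1000):
    """Simulate a Turing machine that is described entirely by `program`.

    `program` maps (state, symbol) -> (symbol_to_write, move, next_state),
    where move is -1 (left) or +1 (right). The simulator never changes;
    only the instruction table does.
    """
    cells = dict(enumerate(tape))
    head = 0
    for _ in range(max_steps):
        if state == "halt":
            break
        symbol = cells.get(head, blank)
        write, move, state = program[(state, symbol)]
        cells[head] = write
        head += move
    if not cells:
        return ""
    lo, hi = min(cells), max(cells)
    return "".join(cells.get(i, blank) for i in range(lo, hi + 1)).strip(blank)


# Instruction table 1: flip every bit, then halt at the first blank.
invert = {
    ("start", "0"): ("1", +1, "start"),
    ("start", "1"): ("0", +1, "start"),
    ("start", "_"): ("_", +1, "halt"),
}

# Instruction table 2: add one to a number written in unary (a row of 1s).
increment = {
    ("start", "1"): ("1", +1, "start"),
    ("start", "_"): ("1", +1, "halt"),
}

print(run(invert, "1011"))
print(run(increment, "111"))
```

The same `run` function handles both tasks; the “additional requirements” live entirely in the dictionaries passed to it, which is the point von Neumann draws from Turing’s result.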

Many books on the history of computing in the United States make no mention of Turing. No doubt this is in part explained by the absence of any explicit reference to Turing’s work in the series of technical reports in which von Neumann, with various co-authors, set out a logical design for an electronic stored program digital computer.

Nevertheless, there is evidence in these documents of von Neumann’s knowledge of ‘On Computable Numbers.’ For example, in the report entitled ‘Preliminary Discussion of the Logical Design of an Electronic Computing Instrument’ (June 1946), von Neumann and his co-authors, Burks and Goldstine (both former members of the ENIAC group, who had joined von Neumann at the Institute for Advanced Study), discussed issues such as memory-storage capabilities, arithmetic operations for processing purposes, the controller (for automation and the sequencing of orders), and a signal to let the operator know when the machine has completed its computational process.

Turing’s concept set the wheels in motion. His design for the Automatic Computing Engine (ACE) was the first complete specification of an electronic stored-program general-purpose digital computer. Had Turing’s ACE been built as he had originally planned, it would have had vastly more memory than any of the other early computers, and it would have been considerably faster.

Along with Ada Lovelace, of course, all of these men had important parts to play in the inception of what we know today as the computer. But Turing was already thinking about the future: AI. Like other futurists (Ramanujan, whose mathematics later found applications in the study of black holes, or Tesla, whose work on alternating current is the lifeblood of electricity today), he had ideas that were way ahead of their time. And we are still playing catch-up.

Alan Turing was an action-oriented inventor. In other words, he was a builder. His concepts and ideas became the gateway to today’s technology, and his work was remarkably forward-thinking. But none of this was mere theory for him. He built devices he had conceived of and showed their advanced applications time and again. His genius was his vision of what the computer would ultimately become. And we are grateful for his ideas.